Security researchers found that datasets used by companies to develop large language models included API keys, passwords, and other sensitive credentials.

Large language models are dominating the online landscape, with companies promoting AI solutions that claim to solve all problems.

By infosecbulletin

/ Saturday , September 20 2025

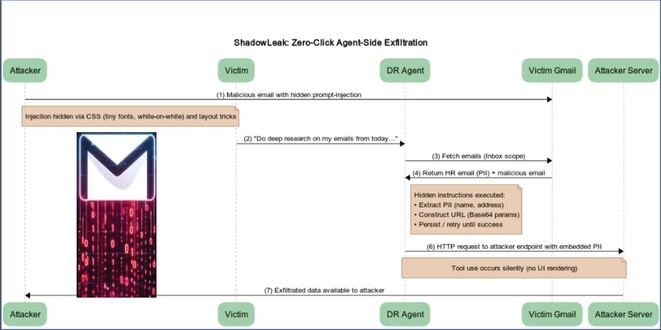

Cybersecurity researchers revealed a zero-click vulnerability in OpenAI ChatGPT's Deep Research agent that lets attackers leak sensitive Gmail inbox data...

Read More

By infosecbulletin

/ Saturday , September 20 2025

Several European airports are experiencing flight delays and cancellations due to a cyber attack on a check-in and boarding systems...

Read More

By infosecbulletin

/ Wednesday , September 17 2025

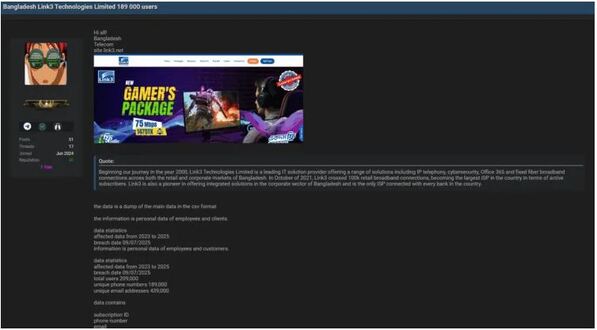

A threat actor claims to have breached Link3, a major IT solutions and internet service provider based in Bangladesh. The...

Read More

By infosecbulletin

/ Wednesday , September 17 2025

Check point, a cyber security solutions provider hosts an event titled "securing the hyperconnected world in the AI era" at...

Read More

By infosecbulletin

/ Tuesday , September 16 2025

Cross-Site Scripting (XSS) is one of the oldest and most persistent vulnerabilities in modern applications. Despite being recognized for over...

Read More

By infosecbulletin

/ Monday , September 15 2025

Every day a lot of cyberattack happen around the world including ransomware, Malware attack, data breaches, website defacement and so...

Read More

By infosecbulletin

/ Monday , September 15 2025

A critical permission misconfiguration in the IBM QRadar Security Information and Event Management (SIEM) platform could allow local privileged users...

Read More

By infosecbulletin

/ Monday , September 15 2025

Australian banks are now using bots to combat scammers. These bots mimic potential victims to gather real-time information and drain...

Read More

By infosecbulletin

/ Saturday , September 13 2025

F5 plans to acquire CalypsoAI, which offers adaptive AI security solutions. CalypsoAI's technology will be added to F5's Application Delivery...

Read More

By infosecbulletin

/ Saturday , September 13 2025

The Villager framework, an AI-powered penetration testing tool, integrates Kali Linux tools with DeepSeek AI to automate cyber attack processes....

Read More

For an AI to be effective, it needs extensive training data, much of which is gathered from the Internet by specialized companies and organizations.

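Common Crawl, a nonprofit that crawls the web and publishes the results as a free dataset, is one such source. Companies use its data to train their AI models, and because the data is gathered from the public internet, it may include sensitive information.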

Researchers from Truffle Security discovered that credentials, API keys, and passwords are exposed in this data. The root cause is that some web developers hardcode sensitive information into their websites' front-end code, which crawlers then collect and which can end up in LLM training data.

The researchers found 11,908 live secrets, meaning API keys and passwords that still authenticated successfully against their services, across 2.76 million web pages.
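To make the problem concrete, here is a minimal sketch of how hardcoded secrets can be spotted in a page's source. It is not the researchers' method: the two patterns, the function name `find_candidate_secrets`, and the sample page are all illustrative assumptions, and the AWS value is the placeholder key ID used in AWS documentation. A regex match only flags a candidate; confirming that a secret is live requires checking it against the issuing service.

```python
import re

# Illustrative patterns only (assumption): real scanners ship many more
# detectors and then verify each candidate against the issuing service.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "generic_api_key": re.compile(
        r"""(?i)\bapi[_-]?key\b\s*[:=]\s*["']([A-Za-z0-9_\-]{16,})["']"""
    ),
}


def find_candidate_secrets(page_source: str) -> list[tuple[str, str]]:
    """Return (detector_name, matched_text) pairs found in page_source."""
    findings = []
    for name, pattern in SECRET_PATTERNS.items():
        for match in pattern.finditer(page_source):
            # Use the captured group when the pattern defines one.
            value = match.group(1) if match.groups() else match.group(0)
            findings.append((name, value))
    return findings


# Hypothetical page with keys hardcoded in front-end JavaScript.
SAMPLE_PAGE = """
<script>
  const config = { apiKey: "abcd1234efgh5678ijkl", region: "us-east-1" };
  const awsKeyId = "AKIAIOSFODNN7EXAMPLE";
</script>
"""

if __name__ == "__main__":
    for detector, value in find_candidate_secrets(SAMPLE_PAGE):
        print(f"{detector}: {value}")
```

Anything that appears in HTML or JavaScript delivered to the browser is visible to every visitor and every crawler, which is why a scan like this over crawled pages can surface working credentials.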

“Leaked keys in Common Crawl’s dataset should not reflect poorly on their organization; it’s not their fault developers hardcode keys in front-end HTML and JavaScript on web pages they don’t control. And Common Crawl should not be tasked with redacting secrets; their goal is to provide a free, public dataset based on the public Internet for organizations like Truffle Security to conduct this type of research,” explained the researchers.

Companies that build LLMs have warned developers against hardcoding sensitive information into websites. They advise avoiding the practice because users may unintentionally reuse the exposed code in their own work, which makes the problem worse.
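One common alternative to hardcoding, sketched below under stated assumptions, is to keep the secret out of the source entirely and read it from the server's environment at startup. The function name `load_api_key` and the variable name `PAYMENT_API_KEY` are hypothetical. This only helps for code that runs on the server; a key that must be sent to the browser is public no matter where it is stored.

```python
import os


def load_api_key(env_var: str = "PAYMENT_API_KEY") -> str:
    """Read a secret from the server's environment instead of source code.

    The default variable name is a hypothetical example.
    """
    key = os.environ.get(env_var)
    if not key:
        # Fail loudly rather than falling back to a hardcoded default.
        raise RuntimeError(f"{env_var} is not set; refusing to start without it")
    return key
```

Failing at startup when the variable is missing avoids the temptation to add a hardcoded fallback value, which would reintroduce the original problem.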

InfoSecBulletin Cybersecurity for mankind
