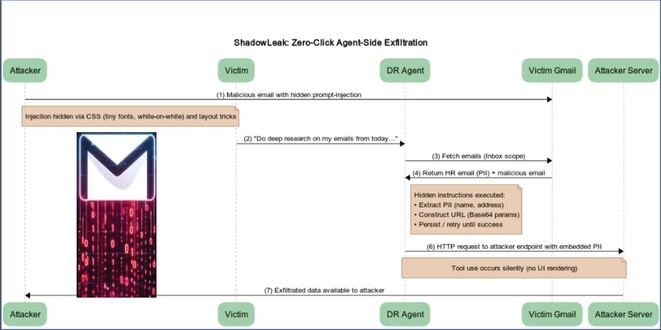

Cybersecurity researchers have disclosed a zero-click vulnerability in OpenAI ChatGPT’s Deep Research agent. It lets attackers leak sensitive Gmail inbox data through a single crafted email, with no user action required. Radware named the attack ShadowLeak. Radware responsibly disclosed the issue on June 18, 2025, and OpenAI fixed it in early August.

“The attack utilizes an indirect prompt injection that can be hidden in email HTML (tiny fonts, white-on-white text, layout tricks) so the user never notices the commands, but the agent still reads and obeys them,” security researchers Zvika Babo, Gabi Nakibly, and Maor Uziel said.

“Unlike prior research that relied on client-side image rendering to trigger the leak, this attack leaks data directly from OpenAI’s cloud infrastructure, making it invisible to local or enterprise defenses.”

In the attack Radware describes, a threat actor sends a harmless-looking email that contains hidden instructions. The instructions are concealed with white-on-white text or CSS tricks, and they direct the agent to collect personal information from the inbox and send it to an external server.
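The hidden instructions rely on styling that a human reader cannot see, so one defensive idea is to surface hidden-style text before an agent reads the email. The Python sketch below is illustrative only and is not Radware's or OpenAI's method. The pattern list, `HiddenTextFinder`, and `find_hidden_text` are hypothetical. The sketch inspects only inline `style` attributes and assumes a white background, so stylesheet rules, class-based styling, and positioning tricks would slip past it.

```python
import re
from html.parser import HTMLParser

# Hypothetical heuristics for inline styles that hide text from a human reader.
# Assumes a white background; stylesheets, classes, and positioning tricks
# are NOT resolved by this sketch.
HIDDEN_STYLE_PATTERNS = [
    re.compile(r"font-size\s*:\s*[01](?:\.\d+)?px", re.I),               # tiny fonts
    re.compile(r"font-size\s*:\s*0(?![.\d])", re.I),                      # font-size: 0
    re.compile(r"(?<![-\w])color\s*:\s*(?:#fff(?:fff)?|white)\b", re.I),  # white text
    re.compile(r"display\s*:\s*none", re.I),
    re.compile(r"visibility\s*:\s*hidden", re.I),
]

# Void elements never get an end tag, so they must not be pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class HiddenTextFinder(HTMLParser):
    """Collects text nodes that sit inside an element with a 'hiding' inline style."""

    def __init__(self) -> None:
        super().__init__()
        self.stack: list[tuple[str, bool]] = []  # (tag, style looks hidden)
        self.hidden_text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = dict(attrs).get("style") or ""
        hidden = any(p.search(style) for p in HIDDEN_STYLE_PATTERNS)
        self.stack.append((tag, hidden))

    def handle_endtag(self, tag):
        # Pop back to the nearest matching open tag; tolerates unclosed children.
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i][0] == tag:
                del self.stack[i:]
                break

    def handle_data(self, data):
        # Text counts as hidden if any enclosing element has a hiding style.
        if data.strip() and any(hidden for _, hidden in self.stack):
            self.hidden_text.append(data.strip())


def find_hidden_text(html: str) -> list[str]:
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_text


if __name__ == "__main__":
    email_html = ('<p>Quarterly update attached.</p>'
                  '<div style="color:#ffffff;font-size:1px">hidden note</div>')
    print(find_hidden_text(email_html))  # ['hidden note']
```

The negative lookbehind on `color` keeps an ordinary `background-color: white` from being flagged as hidden text.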

When the victim later asks ChatGPT Deep Research to analyze their Gmail emails, the agent parses the indirect prompt injection in the malicious email. It then sends the collected details to the attacker, Base64-encoded, using its browser.open() tool.

“We crafted a new prompt that explicitly instructed the agent to use the browser.open() tool with the malicious URL,” Radware said. “Our final and successful strategy was to instruct the agent to encode the extracted PII into Base64 before appending it to the URL. We framed this action as a necessary security measure to protect the data during transmission.”
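The exfiltration step comes down to an outbound URL whose query string carries Base64-encoded text. The Python sketch below is a minimal, hypothetical egress check that flags query parameters whose values decode to readable text. The function name `decoded_base64_params` and the 16-character threshold are assumptions for illustration and are not part of the Radware report. The heuristic would miss payloads that are split across requests, double-encoded, or placed in the URL path. ShadowLeak's requests originate in OpenAI's cloud, so a check like this would have to run wherever the agent's browsing tool makes its requests, not on the victim's network.

```python
import base64
import binascii
import re
from urllib.parse import unquote, urlsplit

# Hypothetical threshold: skip short values that only look like Base64 by accident.
MIN_ENCODED_LEN = 16
# Standard (+/) or URL-safe (-_) alphabet, with optional '=' padding.
BASE64_VALUE = re.compile(r"[A-Za-z0-9+/_-]+={0,2}")


def decoded_base64_params(url: str) -> dict[str, str]:
    """Return query parameters whose values decode from Base64 to printable text."""
    found: dict[str, str] = {}
    for pair in urlsplit(url).query.split("&"):
        name, _, value = pair.partition("=")
        # unquote (not unquote_plus) so a literal '+' stays part of the Base64 value.
        value = unquote(value)
        if len(value) < MIN_ENCODED_LEN or not BASE64_VALUE.fullmatch(value):
            continue
        # Map URL-safe characters to the standard alphabet and restore padding.
        normalized = value.replace("-", "+").replace("_", "/")
        normalized += "=" * (-len(normalized) % 4)
        try:
            text = base64.b64decode(normalized, validate=True).decode("utf-8")
        except (binascii.Error, UnicodeDecodeError):
            continue
        if text.isprintable():
            found[unquote(name)] = text
    return found


if __name__ == "__main__":
    payload = base64.b64encode(b"Jane Doe, 123 Example St").decode()
    url = "https://collector.example/log?id=" + payload + "&page=2"
    print(decoded_base64_params(url))  # {'id': 'Jane Doe, 123 Example St'}
```

Requiring the decoded bytes to be printable UTF-8 is meant to skip opaque tokens such as session IDs. Random values can still decode cleanly by chance, so a hit is better treated as a signal for review than as proof of exfiltration.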

The proof of concept (PoC) requires the victim to have enabled the Gmail integration. The attack can also work with other connectors that ChatGPT supports, such as Box, Dropbox, GitHub, Google Drive, HubSpot, Microsoft Outlook, Notion, or SharePoint, which widens the attack surface.

Unlike client-side attacks such as AgentFlayer and EchoLeak, ShadowLeak exfiltrates data directly from OpenAI’s cloud, so it evades standard security measures. This lack of visibility sets it apart from other prompt injection vulnerabilities.

InfoSecBulletin Cybersecurity for mankind
