Microsoft has released a new open automation framework called PyRIT (Python Risk Identification Toolkit). It helps security professionals and machine learning engineers identify and reduce risks in generative models.

The need for automation in AI Red Teaming:

Red teaming AI systems is complex. Microsoft’s AI Red Team consists of experts in security, adversarial machine learning, and responsible AI. They utilize resources from the Fairness center, AETHER, and the Office of Responsible AI. The goal is to identify and measure AI risks and develop mitigations to minimize them.

PyRIT for generative AI Red teaming:

PyRIT was tested by the Microsoft AI Red Team. It was initially a set of one-off scripts used for testing generative AI systems in 2022. As they tested different types of generative AI systems and looked for various risks, they added new features. Now, PyRIT is a reliable tool in the Microsoft AI Red Team’s toolkit.

Microsoft found a major advantage in using PyRIT: efficiency. For example, during a red teaming exercise on a Copilot system, we were able to select a category, create thousands of malicious prompts, and use PyRIT’s scoring engine to evaluate the system’s output in just a few hours instead of weeks.

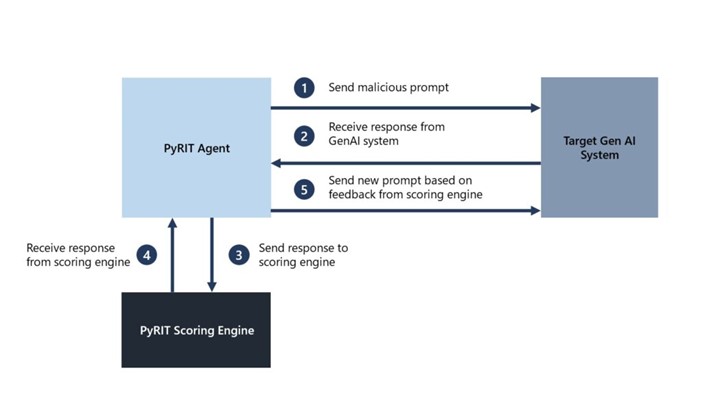

PyRIT is not meant to replace manual red teaming of generative AI systems, but rather to enhance an AI red teamer’s expertise and automate tedious tasks. It helps identify potential risks that can be further investigated by the security professional. The professional maintains control over the strategy and execution of the AI red team operation, while PyRIT automates the process of generating harmful prompts using the initial dataset provided by the professional.

PyRIT is not just a prompt generation tool. It adapts its approach based on the AI system’s response and generates the next input. This process continues until the security professional’s goal is reached. click here to read out the full report.

InfoSecBulletin Cybersecurity for mankind

InfoSecBulletin Cybersecurity for mankind